Reddit's AI Knows If You Criticize Political Figures

The platform is using AI to build behavioral profiles, categorizing its users' views and attitudes without consent

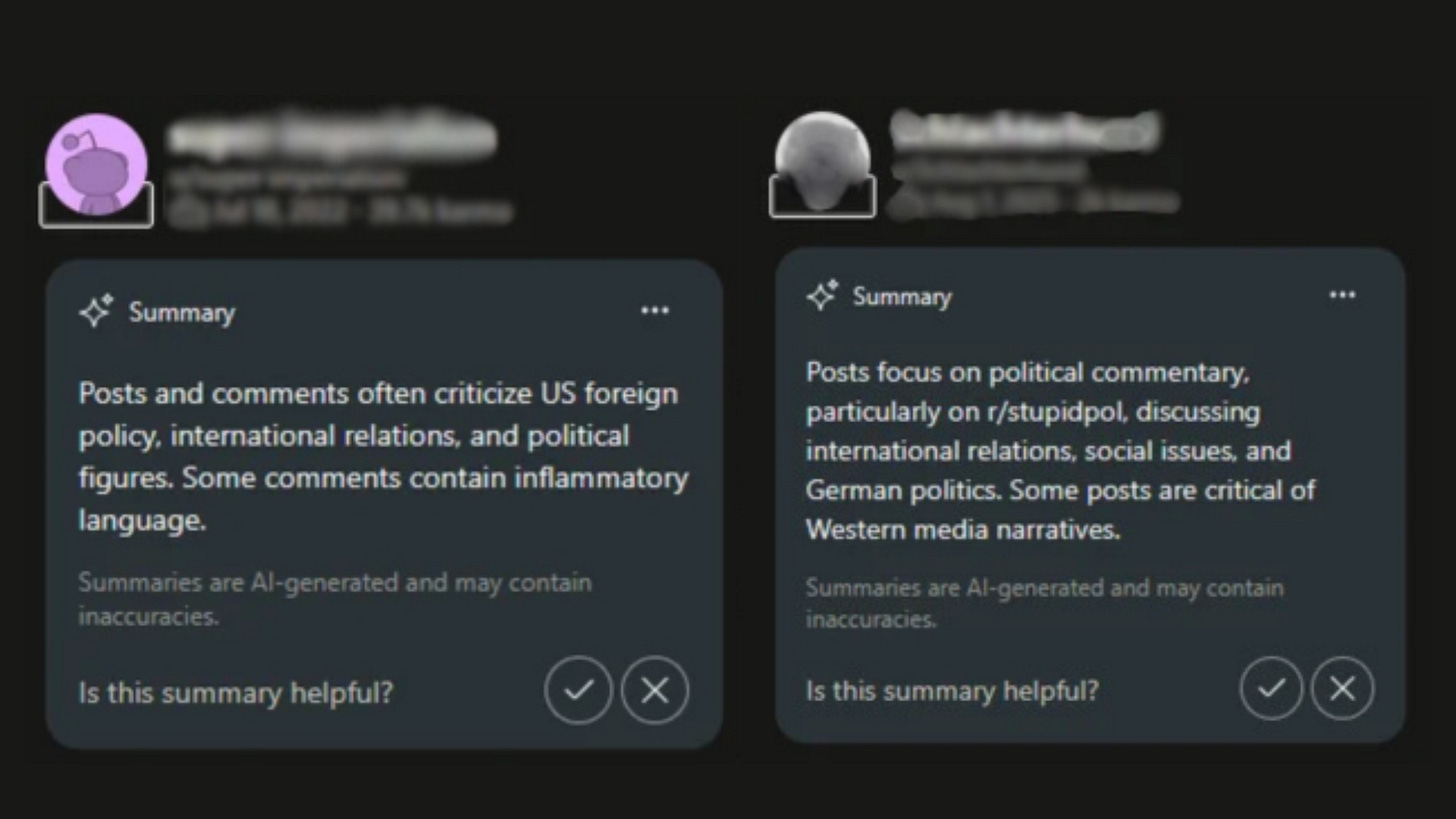

Reddit has been quietly profiling its users. Over the summer, the company rolled out an AI tool that automatically generates summaries of users’ posting histories for moderators. The summaries include labels like “often criticize political figures” or “emotionally reactive”—judgments about behavior, tone, and ideological leanings that users themselves cannot see.

There was no press release, no user notification, no opt-out. The company simply deployed the feature, documented it in a changelog buried in its help center, and let it run. Most users only found out when moderators started discussing it, sharing screenshots of what the AI was saying about people who had no idea they were being algorithmically assessed.

The feature sounds innocuous enough. Volunteer moderators managing thousands of users could certainly use help sorting through comment histories. One Reddit admin running early tests in 100 communities called it a “game-changer for efficiency.” A moderator shared their own AI-generated summary: “Frequent posts in r/tomorrow focus on Nintendo Switch, gaming, and Reddit tips. Content is generally helpful and engaging.”

But the AI isn’t just summarizing content. It’s making judgments. Examples circulating on Reddit show the system noting things like “often criticize US foreign policy” alongside assessments of users being “critical of Western media narratives.” The algorithm isn’t compressing information; it’s evaluating character, predicting behavior, and flagging ideological positions. All of this happens invisibly to the users being profiled.

What makes this significant isn’t the technology itself. Large language models can analyze text and identify patterns; that’s table stakes now. What matters is that Reddit has fundamentally changed the economics of reputation assessment. Reading through someone’s posting history to understand their behavior used to take time and effort. That friction created a natural limit on surveillance. Now that friction is gone. Evaluating any user’s complete behavioral profile costs essentially nothing and takes no time at all.

This shift from expensive to free changes everything downstream. When an action that once required human judgment and effort becomes automated and instant, you don’t just make the existing system more efficient. You enable entirely new behaviors that weren’t possible before. Reddit has effectively made user profiling a zero-cost operation at scale.

The Surface Effects Are Already Visible

The immediate impact lands on Reddit’s volunteer moderators, who run the platform’s 100,000-plus active communities. Instead of manually reviewing post histories—tedious work when you’re managing thousands of daily interactions—they now get instant behavioral summaries. For moderators dealing with spam, trolls, and coordinated harassment campaigns, this looks like a straightforward improvement. Catching bad actors faster means healthier communities.

Some moderators have welcomed it. “I really like it,” one commented, noting it “gives a handy heads-up on users.” But others have been more skeptical. A Reddit admin testing the feature admitted, “I gotta say it’s a pretty useless feature... sometimes it’s wrong or just too generic... I’d rather read the full context.”

The bigger concerns run deeper than whether the summaries are useful. One issue is bias. If an AI labels someone as “often critical of police,” does that make moderators more likely to view their future comments through that lens? Research on confirmation bias suggests yes: once you’ve been told to look for a pattern, you see it everywhere. The AI summary doesn’t just inform moderator decisions; it potentially shapes them by priming expectations about who users are and what they’re likely to do.

Then there’s the consent problem. This feature went live without any user notification or opt-out mechanism. As one frustrated user in r/privacy put it, Reddit deployed this “without consent, transparency, or opt out,” creating a system where users are being profiled and categorized based on “conduct, style, and ideological affiliations.” That language matters. Reddit built its reputation on pseudonymity and the ability to participate in different communities without a permanent record following you around. An AI-generated reputation that travels with your username undermines that model.

The privacy implications extend beyond Reddit itself. Users in some jurisdictions are now asking whether they can request their AI-generated profiles under data protection laws like GDPR. If these summaries qualify as personal data being processed through automated decision-making, Reddit may be legally required to provide access, allow corrections, and obtain explicit consent. The company’s decision to deploy first and address legal questions later may prove costly.

But perhaps the most immediate concern is how this changes the experience of using Reddit. When you know an algorithm is watching everything you post and building a profile that moderators can see, you start editing yourself. That’s not paranoia; it’s a rational response to surveillance. Communities that depend on open discussion about controversial topics, personal struggles, or political dissent face a chilling effect. Users won’t necessarily leave Reddit. They’ll just participate less freely, knowing their words are being fed into an invisible judgment system.

What Happens When This Scales

Right now, AI summaries are a moderator tool. They’re advisory, not enforcement mechanisms. They affect how moderators see users, not how the platform itself treats them. But that boundary is unlikely to hold. Once you have behavioral profiles on 100 million users, the temptation to use that data more broadly becomes overwhelming. The second-order effects of scaling this system reveal how fundamentally it could reshape Reddit.

Consider how users will adapt. The moment people understand they’re being algorithmically profiled, behavior changes. Some will self-censor, avoiding topics or tones that might generate negative labels. Others will game the system, deliberately posting innocuous content to build a positive profile before engaging in the behavior they actually care about.

These responses represent a shift from authentic participation to performance. The platform becomes less about genuine discussion and more about managing your algorithmic reputation. Communities that currently thrive on raw, unfiltered conversation—ask-me-anything threads, support groups, niche hobby forums—will feel this shift most acutely.

Moderators will change too. With AI doing the pattern-recognition work, a single moderator can oversee far more communities than before. That might sound like improved efficiency, but it also means power consolidates. Reddit has already struggled with the problem of “power mods” who control dozens of major subreddits. AI tools don’t solve that problem; they make it worse by removing the natural limit of human time and attention. When moderating becomes cheap and scalable, the people who already hold power can accumulate more of it.

Then there are the bad actors. Any system that relies on behavioral signals can be gamed, and sophisticated actors will find the exploit. Imagine a spam operation that spends months building positive AI profiles by posting helpful comments and engaging genuinely with communities. Once those accounts have clean reputations, they get deployed for coordinated manipulation: vote brigading, astroturfing, disinformation campaigns. The AI summary says they’re trustworthy contributors, so moderators and algorithms give them more leeway.

Or consider the opposite attack: targeting users you want to discredit by triggering negative AI labels. Coordinate a group to argue with them across multiple threads until the algorithm flags them as “combative” or “disruptive.” Once labeled, their legitimate contributions get dismissed or hidden. This kind of reputation warfare becomes possible when behavioral profiles are both persistent and consequential.

Reddit will inevitably respond by tuning the AI to detect gaming and manipulation. Bad actors will adapt. The platform enters an endless cat-and-mouse game, with each iteration of the algorithm spawning new adversarial tactics. We’ve seen this dynamic play out with Google’s search algorithm and Facebook’s content moderation. There’s no reason to think Reddit will avoid the same cycle.

The Business Model Makes This Inevitable

Understanding why Reddit is doing this requires looking at the money. Earlier this year, Reddit blocked the Internet Archive from indexing its content and restricted API access after discovering that AI companies were scraping Reddit data for free. The platform then signed licensing deals, including one with Google reportedly worth $60 million annually, to provide that same data legally.

Reddit has more than 40 billion comments in its archive. That’s training data at scale, representing real human conversations across virtually every topic imaginable. For AI companies building large language models, Reddit is invaluable. But the value depends on quality. Spam, harassment, and low-quality content dilute the dataset. Reddit has a strong incentive to demonstrate that its data is clean, well-moderated, and reliably categorized.

AI-powered moderation tools serve that goal. They help Reddit scale content quality without proportionally scaling human moderator costs. They generate metadata about users and content that makes the platform more valuable to data buyers. And they position Reddit as not just a content archive, but an actively curated, AI-enhanced dataset.

This also explains Reddit’s recent push into AI-powered products for advertisers. The company launched “Reddit Insights,” which uses AI to analyze conversations and surface trends for marketers. User profiling fits naturally into this strategy. If Reddit can tell advertisers not just that a user participates in gaming communities, but that they’re “enthusiastic about Nintendo” and “responsive to helpful content,” that’s a more valuable ad product.

None of this is necessarily sinister. Reddit needs revenue, advertisers want better targeting, and AI companies need training data. But it does mean user profiling isn’t a side feature that might get rolled back if people complain. It’s core to Reddit’s emerging business model. The company has structural incentives to expand AI profiling, not limit it.

That creates pressure to use the data more broadly. Once you’ve invested in building behavioral profiles, the marginal cost of using them for purposes beyond moderation is low. Personalized content recommendations? Already have the data. Ad targeting? Same data works. Shadow-ranking users based on quality scores? Might as well, since we’re profiling everyone anyway. Each additional use feels incremental, but collectively they transform what Reddit is.

The trajectory points toward Reddit becoming less of a neutral platform and more of an actively managed ecosystem where AI systems make invisible decisions about who gets heard and what gets seen. That might produce a “better” Reddit by conventional metrics: less spam, less harassment, more engaging content. But it would be a fundamentally different Reddit than the one that became culturally significant.

The Risks Beyond Reddit

One angle that hasn’t received much attention: what happens when governments notice that Reddit has detailed behavioral profiles on millions of users, including political views and ideological leanings? Authoritarian regimes already surveil social media to identify dissidents. What Reddit is building would make that surveillance dramatically easier.

Consider how this could work. A government approaches Reddit demanding information about users who criticize the regime. Reddit might refuse direct cooperation, but if the platform is already generating summaries that flag users as “critical of government” or “supportive of opposition movements,” the work is partly done. Even if Reddit doesn’t hand over data, the existence of these profiles makes them a target for hacking, subpoena, or coercion.

The AI isn’t making fine-grained distinctions. It’s pattern-matching. A user who posts in political discussion forums, uses certain keywords, or engages with opposition content gets flagged. The summaries aren’t designed to identify dissidents, but they create the infrastructure that makes identification trivial. Once that infrastructure exists, repressive governments will find ways to access it.

Reddit operates globally. Users in countries with weak rule of law have no guarantee their behavioral profiles stay private. Even in democracies, law enforcement and intelligence agencies increasingly demand access to social media data. Reddit’s AI summaries could become exactly the kind of pre-processed intelligence that makes mass surveillance scalable.

This isn’t hypothetical. We know authoritarian governments already use social media monitoring to track dissidents. We know data breaches happen. We know governments issue national security demands for user information. Adding AI-generated behavioral profiles to the mix doesn’t create new surveillance capabilities; it makes existing ones far more efficient. The cost of identifying politically problematic users drops to nearly zero when an algorithm has already done the categorization work.

Reddit likely hasn’t thought through this risk carefully. The feature was designed for content moderation, not political surveillance. But once you build a system that profiles users based on their expressed views, you can’t control who ultimately gets access or how it’s used. Intent doesn’t matter when the infrastructure enables abuse.

What Comes Next

Several developments seem likely over the next 6-12 months. Reddit will face pressure—from users, privacy advocates, and potentially regulators—to make AI summaries more transparent. Expect some kind of privacy control that lets users see or limit their profile, though probably not opt out entirely. The company needs the data for its business model.

We’ll also likely see AI summaries expand beyond moderators. Reddit has already launched Reddit Answers, an AI-powered search feature that summarizes discussions. Surfacing user profiles to regular users, perhaps by showing a quick summary when you hover over someone’s name, would be a natural extension. The company will probably A/B test this as an opt-in experiment first, framing it as a way to understand who is taking part in a discussion.

The bigger shift could be less visible. Reddit could start using behavioral profiles to inform algorithmic decisions about content distribution. Not explicitly—there wouldn’t be an announcement that user quality scores now affect ranking. But engineers will add reputation signals as features in their recommendation models, and over time those signals will shape what content surfaces. Users will notice their posts seem to get less traction or that certain voices dominate discussions more than before, but the cause won’t be obvious.

Competitors will be watching closely. If Reddit demonstrates that AI-powered user profiling improves engagement metrics or moderator efficiency without causing major backlash, expect X, Discord, and others to follow. Conversely, if Reddit faces serious regulatory trouble or a user exodus, competitors might position themselves as privacy-respecting alternatives. Either way, Reddit is running the experiment that will shape industry norms.

Regulators may move faster than expected. Europe’s GDPR and the emerging AI Act have strict requirements around automated profiling and decision-making. If Reddit’s AI summaries are deemed to constitute automated processing of personal data—especially sensitive categories like political views—the company could face enforcement action. That might force Reddit to disable the feature in Europe or implement heavy restrictions that make it less useful globally.

The wild card is whether Reddit’s users actually care enough to change behavior. Past controversies on Reddit have generated massive outcry but limited long-term impact. The 2023 API protests shut down major subreddits for days, but most users eventually returned. Complaining is easy; leaving is hard, especially when there’s no obvious alternative at Reddit’s scale. Reddit is betting that users will grumble about AI profiling but ultimately accept it, because the platform is too useful to abandon.

That bet might be right. But it also might not account for cumulative erosion of trust. Each individual change—API restrictions, AI profiling, increased advertising, content licensing deals—seems manageable in isolation. Together, they paint a picture of Reddit becoming more extractive and less community-focused. The users who made Reddit valuable aren’t leaving suddenly. They’re just participating less, posting less, caring less. By the time Reddit notices the problem, the damage may be hard to reverse.

The Deeper Pattern

What’s happening at Reddit isn’t unique. Platforms everywhere are wrestling with how to integrate AI while maintaining the human elements that made them successful. X and Threads have been creating AI-generated summaries of trending topics. Discord offers AI moderation tools. Stack Overflow is fighting back against AI-generated content while trying to figure out how to use AI productively to answer questions.

The common thread is that AI makes things that used to be expensive—content moderation, reputation assessment, behavioral analysis—nearly free. That economic shift creates pressure to automate. Human moderators are slow and don’t scale; AI is fast and handles billions of interactions. Authentic human judgment is valuable but costly; algorithmic assessment is cheap and consistent. Every platform faces the same calculation: how much humanity can we replace with automation before we lose what made us valuable in the first place?

Reddit is further along this path than most, partly because it has stronger business incentives to profile users. The data licensing deals create pressure to demonstrate content quality. The advertising business creates pressure to enable better targeting. The competition with Google for search traffic creates pressure to deploy AI-enhanced features. All of these push in the same direction: more profiling, more automation, more algorithmic control.

The outcome probably isn’t that Reddit becomes an AI-dominated dystopia where human moderators disappear and users are sorted into reputation tiers. The more likely path is gradual drift. Features get added incrementally. Each one seems reasonable in isolation. Together, they slowly transform what the platform is and how it functions. Users adapt, moderators adjust, and eventually the new normal feels natural—even though it bears little resemblance to what Reddit was five years ago.

That drift matters because Reddit occupies a unique position in the internet ecosystem. It’s where people go for unfiltered human opinion. It’s where niche communities gather around shared interests. It’s where knowledge gets built collaboratively through discussion. If Reddit becomes just another algorithmically curated feed optimized for engagement and advertiser revenue, that’s not just a loss for Reddit users. It’s a loss for the internet as a whole.

The question isn’t whether AI will be part of Reddit’s future—it clearly will be. The question is whether Reddit can integrate AI in ways that enhance community and conversation rather than replacing them. So far, the evidence suggests Reddit is choosing efficiency and revenue over authenticity and trust. That might be the right business decision. But it’s worth being clear about what’s being traded away.

Reddit’s AI is already judging users. The profiles exist, the labels are being applied, and the infrastructure for scaled behavioral analysis is being built. What happens next depends on choices Reddit makes about transparency, consent, and the appropriate boundaries for algorithmic assessment. But it also depends on whether users, regulators, and competitors push back hard enough to force different choices.

Right now, the path of least resistance leads toward more profiling, more automation, and more invisible algorithmic control. Changing that trajectory requires recognizing what’s at stake: not just privacy in the abstract, but the ability to participate in online communities without every word you write feeding into a permanent behavioral record that shapes how you’re treated. That’s the real change Reddit’s quiet AI deployment represents. Everything else is downstream from there.